Extracting Tables and Text from Images Using Python

In this blog, we'll explore a complete Python solution that detects and extracts tables and text from images using libraries like Transformers, OpenCV, PaddleOCR, and easyOCR. This step-by-step breakdown includes code to detect tables, extract content from individual table cells, and retrieve any remaining text in the image.

Overview

When working with scanned documents, such as invoices or forms, it is essential to accurately extract both structured information (like tables) and unstructured text. The approach we’ll explore uses Microsoft's pretrained Table Transformer detection model to locate tables, and OCR to extract the text from both the table cells and the rest of the image.

Steps:

1. Detect the table using Microsoft's Table Transformer model and save an image that contains only the detected table.

2. From the detected table, create a separate image for each cell.

3. Read the text from each cell image.

4. To read the text outside the table, fill the area where the table was located with white, then run OCR on the rest of the image.

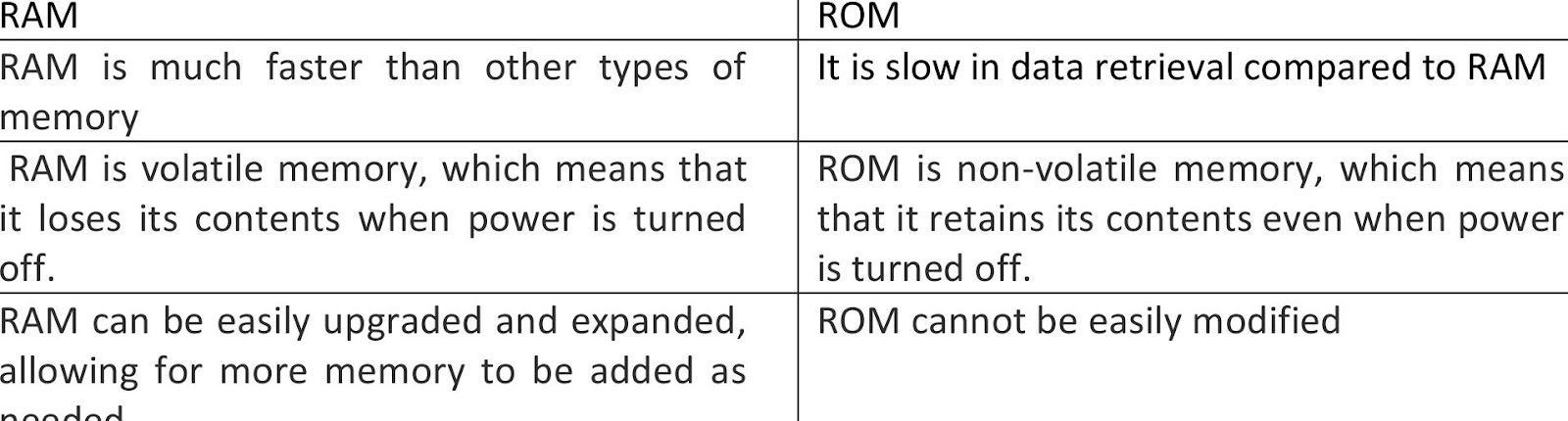

Figures (not reproduced here): original image; detected table image; sample cell images; image with text only, excluding the table.

Code Breakdown

- Library Imports:

To begin, we import the required libraries:

- PIL: For opening and manipulating images.

- Transformers: Pretrained models from Hugging Face for table detection.

- torch: For working with PyTorch models.

- OpenCV: An image processing library.

- easyOCR and PaddleOCR: Libraries to perform OCR for text extraction.

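    # ImageDraw is needed later by plot_results
    from PIL import Image, ImageDraw
    # DetrFeatureExtractor is deprecated in recent Transformers releases; DetrImageProcessor is its replacement
    from transformers import DetrFeatureExtractor, TableTransformerForObjectDetection
    import torch
    import cv2
    import os
    import numpy as np
    import pandas as pd
    from tabulate import tabulate
    import easyocr
    from paddleocr import PaddleOCR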

- OCR Setup:

We initialize both easyOCR and PaddleOCR for reading text from images.

    reader = easyocr.Reader(['en'])
    ocr = PaddleOCR(use_angle_cls=True, lang='en')

- Pretrained Table Detection Model:

We use Microsoft’s TableTransformerForObjectDetection model to detect tables in the image.

    model = TableTransformerForObjectDetection.from_pretrained("microsoft/table-transformer-detection")

- Paths Setup: Defining file paths for images, processed images, and text outputs.

    imagesFolderPath = 'E:/AI Codes/Extract text and table from image/Images'
    txt_Folder = 'E:/AI Codes/Extract text and table from image/txtFiles'
    inProcessImages_Folder_path = 'E:/AI Codes/Extract text and table from image/inProcessImages'
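The code in this post writes into the in-process and text folders, so they need to exist before the first image is processed. Here is a minimal sketch of one way to prepare them. It assumes a hypothetical helper named `prepare_folders` and a base directory of your choice; neither is part of the original code.

```python
import os
import tempfile


def prepare_folders(base_dir):
    """Return (images, txt, in_process) folder paths under base_dir, creating any that are missing.

    Hypothetical helper for illustration; the sub-folder names mirror the paths above.
    """
    folders = tuple(
        os.path.join(base_dir, name)
        for name in ("Images", "txtFiles", "inProcessImages")
    )
    for folder in folders:
        # exist_ok=True makes the call safe to repeat on every run
        os.makedirs(folder, exist_ok=True)
    return folders


# Example on a throwaway directory rather than a real drive path
with tempfile.TemporaryDirectory() as tmp:
    images_dir, txt_dir, in_process_dir = prepare_folders(tmp)
    print(os.path.isdir(in_process_dir))  # True while the temporary directory exists
```

The three returned paths could then be assigned to `imagesFolderPath`, `txt_Folder`, and `inProcessImages_Folder_path`.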

Table Detection and Extraction

Step 1: Detecting the Table

The function detectTable detects tables in an image using Microsoft's Table Transformer detection model. The detected table is saved as a separate image file for further processing.

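    def detectTable(original_image_path, base_filename):
        image = Image.open(original_image_path).convert("RGB")
        feature_extractor = DetrFeatureExtractor()
        encoding = feature_extractor(image, return_tensors="pt")
        with torch.no_grad():
            outputs = model(**encoding)
        # Rescale the predicted boxes to pixel coordinates. PIL's size is
        # (width, height), while target_sizes expects (height, width).
        target_sizes = torch.tensor([image.size[::-1]])
        results = feature_extractor.post_process_object_detection(
            outputs, threshold=0.7, target_sizes=target_sizes
        )[0]
        plot_results(image, results['scores'], results['labels'], results['boxes'], base_filename, original_image_path)
        # Return integer pixel boxes so later steps can mask the table area
        return [[int(round(v)) for v in box] for box in results['boxes'].tolist()]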

- How it works: The table detection model processes the image and returns bounding boxes for detected tables. The plot_results function saves an image that contains only the detected table.
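The detector reports boxes as floating-point corner coordinates, while cropping and masking work on whole pixels inside the image. The sketch below shows one way to round, pad, and clamp a box before using it. `to_pixel_box` and its `pad` parameter are hypothetical names for illustration, not part of Transformers or the original code.

```python
def to_pixel_box(box, width, height, pad=0):
    """Convert a float (x1, y1, x2, y2) box to integer pixels inside the image.

    pad widens the box on every side, which can help keep table borders in
    the crop; the result is clamped so it never leaves the image.
    """
    x1, y1, x2, y2 = box
    left = max(int(round(x1)) - pad, 0)
    top = max(int(round(y1)) - pad, 0)
    right = min(int(round(x2)) + pad, width)
    bottom = min(int(round(y2)) + pad, height)
    return left, top, right, bottom


# A box that slightly overshoots the right edge of a 400x300 image
print(to_pixel_box((12.4, 30.6, 410.2, 215.3), width=400, height=300, pad=5))
# (7, 26, 400, 220)
```

The same integer box can be passed both to the crop in Step 2 and to the white-fill in Step 4, so the two steps agree on where the table is.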

Step 2: Cropping Cells from Detected Table

Once the table is detected, the plot_results function crops it out of the page and saves it as its own image. This table image is the input from which the separate cell images are created; the excerpt below shows the table crop.

    def plot_results(image, scores, labels, boxes, base_filename, original_image_path):
        draw = ImageDraw.Draw(image)
        for score, label, box in zip(scores, labels, boxes):
            # Save the table image first, so the crop does not include the red outline
            table_image = image.crop(box.tolist())
            table_image.save(f'{inProcessImages_Folder_path}/{base_filename}_table.jpg')
            # Draw bounding box around detected table
            draw.rectangle(box.tolist(), outline="red", width=3)

- How it works: Each bounding box returned by the detector is used to crop the table out of the page. The cropped table image is saved, and the individual cell images are then cut from it for text extraction.

Step 3: Reading Text from Each Cell

The table_detection_display function processes the detected table image and extracts text from each individual cell using the OCR libraries.

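    def table_detection_display(img_path):
        img = cv2.imread(img_path)
        img_gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
        # Binarize the inverted image so the dark ruling lines become white foreground
        _, img_bin = cv2.threshold(cv2.bitwise_not(img_gray), 0, 255, cv2.THRESH_BINARY | cv2.THRESH_OTSU)
        # Detect vertical and horizontal lines in the table
        kernel_length = max(img_gray.shape[1] // 80, 1)
        vertical_kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (1, kernel_length))
        horizontal_kernel = cv2.getStructuringElement(cv2.MORPH_RECT, (kernel_length, 1))
        vertical_lines_img = cv2.dilate(cv2.erode(img_bin, vertical_kernel), vertical_kernel)
        horizontal_lines_img = cv2.dilate(cv2.erode(img_bin, horizontal_kernel), horizontal_kernel)
        # Segment individual cells: merge both line masks into one grid, then
        # treat every region enclosed by grid lines as a cell
        table_segment = cv2.bitwise_or(vertical_lines_img, horizontal_lines_img)
        contours, _ = cv2.findContours(cv2.bitwise_not(table_segment), cv2.RETR_LIST, cv2.CHAIN_APPROX_SIMPLE)
        # Use OCR to extract text from each cell
        cell_results = []
        for contour in contours:
            x, y, w, h = cv2.boundingRect(contour)
            # Skip specks and the image-sized outer region (these thresholds are assumptions)
            if w < 10 or h < 10 or (w > 0.95 * img.shape[1] and h > 0.95 * img.shape[0]):
                continue
            cell_results.append(((x, y, w, h), ocr.ocr(img[y:y + h, x:x + w], cls=True)))
        # Store extracted text together with each cell's position
        return cell_results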

- How it works: Using OpenCV, we detect vertical and horizontal lines to isolate table cells, and then apply OCR to extract text from each cell.
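Cell regions found by image processing generally do not arrive in reading order, so the cell boxes usually need to be grouped into rows and sorted left to right before the text is reassembled into a table. The sketch below shows one simple way to do that in plain Python. The helper name `group_cells_into_rows` and the `row_tolerance` value are assumptions for illustration, not part of the original code.

```python
def group_cells_into_rows(cell_boxes, row_tolerance=10):
    """Group (x, y, w, h) cell boxes into rows, top to bottom, each row left to right.

    A cell joins the current row when its top edge is within row_tolerance
    pixels of the top edge of the first cell placed in that row.
    """
    rows = []
    for box in sorted(cell_boxes, key=lambda b: (b[1], b[0])):
        if rows and abs(box[1] - rows[-1][0][1]) <= row_tolerance:
            rows[-1].append(box)
        else:
            rows.append([box])
    # Within each row, order the cells by their left edge
    return [sorted(row, key=lambda b: b[0]) for row in rows]


# Four cells of a 2x2 table, listed out of order and slightly misaligned
cells = [(120, 52, 100, 40), (10, 10, 100, 40), (10, 50, 100, 40), (120, 8, 100, 40)]
for row in group_cells_into_rows(cells):
    print(row)
# [(10, 10, 100, 40), (120, 8, 100, 40)]
# [(10, 50, 100, 40), (120, 52, 100, 40)]
```

With the boxes ordered this way, the OCR text of each cell can be placed into a list of rows and handed to pandas or tabulate for display.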

Step 4: Removing the Table and Extracting Remaining Text

After extracting table content, we need to remove the table from the image and read the rest of the text. To do this, we fill the table area with a white color and then run OCR on the rest of the image.

    def extractRemainingText(image_path, table_boxes):
        img = cv2.imread(image_path)
        for box in table_boxes:
            # cv2.rectangle needs integer pixel coordinates
            x1, y1, x2, y2 = (int(round(v)) for v in box)
            # Fill the table area with white color
            cv2.rectangle(img, (x1, y1), (x2, y2), (255, 255, 255), -1)
        # Save the modified image
        processed_image_path = f"{inProcessImages_Folder_path}/processed_{os.path.basename(image_path)}"
        cv2.imwrite(processed_image_path, img)
        # Extract remaining text using OCR
        result = ocr.ocr(processed_image_path, cls=True)
        return result

- How it works: The table's location is filled with white color to eliminate it from the image. Then, we run OCR on the remaining parts of the image to extract any unstructured text outside the table.
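The OCR call returns a nested structure rather than a plain string, so it has to be flattened before it can be written to a text file. The sketch below assumes the result layout used by PaddleOCR 2.x: one list per image, each holding `[box, (text, confidence)]` entries, or `None` when nothing was detected. `ocr_result_to_text` is a hypothetical helper, and the sample data is made up for illustration.

```python
def ocr_result_to_text(result):
    """Join the recognized strings of a PaddleOCR-style result into one text block.

    Assumes one list per image, each containing [box, (text, confidence)]
    entries, with None or an empty list where no text was found.
    """
    lines = []
    for page in result or []:
        if not page:
            continue
        for _box, (text, _confidence) in page:
            lines.append(text)
    return "\n".join(lines)


# Made-up result with two recognized lines on a single image
sample = [[
    [[[0, 0], [90, 0], [90, 12], [0, 12]], ("Invoice No. 42", 0.98)],
    [[[0, 20], [60, 20], [60, 32], [0, 32]], ("Total due", 0.95)],
]]
print(ocr_result_to_text(sample))
# Invoice No. 42
# Total due
```

Handling the empty case explicitly matters here, because an image whose only content was the table has no remaining text once the table is filled with white.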

Final Integration

The main loop integrates all these functions:

- Detect tables and save the cropped images.

- Process each table cell and extract text.

- Remove the table from the original image and extract the remaining text.

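    for filename in os.listdir(imagesFolderPath):
        img_path = os.path.join(imagesFolderPath, filename)
        base_filename = os.path.splitext(filename)[0]
        # Assumes detectTable returns the list of detected table boxes
        table_boxes = detectTable(img_path, base_filename)
        with open(os.path.join(txt_Folder, f"{base_filename}.txt"), 'w', encoding='utf-8') as file:
            # Extract text from the detected table
            # (extractText is not shown in this post; it is assumed to return a string)
            file.write(extractText(img_path))
            # Extract remaining text from the image
            remaining_text = extractRemainingText(img_path, table_boxes)
            # The OCR result is a nested list, not a string; keep only the text
            # (assumes PaddleOCR 2.x entries of the form [box, (text, confidence)])
            lines = [line[1][0] for page in remaining_text if page for line in page]
            file.write("\n" + "\n".join(lines))
        print(f"Completed processing: {filename}")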
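`os.listdir` returns every entry in the folder, including sub-folders and non-image files, and those would fail when opened as images. Here is a small sketch of a filter that could feed the loop instead. The helper name `list_image_files` and the extension list are assumptions you may want to adjust.

```python
import os
import tempfile

# Extensions treated as images; an assumed list, extend it as needed
IMAGE_EXTENSIONS = {".png", ".jpg", ".jpeg", ".bmp", ".tif", ".tiff"}


def list_image_files(folder):
    """Return the sorted names of regular files in folder with an image extension."""
    names = []
    for name in os.listdir(folder):
        path = os.path.join(folder, name)
        extension = os.path.splitext(name)[1].lower()
        if os.path.isfile(path) and extension in IMAGE_EXTENSIONS:
            names.append(name)
    return sorted(names)


# Example on a throwaway directory containing two images and one text file
with tempfile.TemporaryDirectory() as tmp:
    for name in ("scan1.PNG", "notes.txt", "scan2.jpg"):
        open(os.path.join(tmp, name), "w").close()
    print(list_image_files(tmp))  # ['scan1.PNG', 'scan2.jpg']
```

Sorting the names also makes the processing order the same from run to run, which helps when comparing outputs.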

Conclusion

This Python solution combines a table detection model with OCR to handle images that contain both tables and free text. Whether you're processing scanned forms or extracting tables and text from invoices, this approach gives you a structured way to extract both the table content and the unstructured text around it.

Feel free to try this solution on your datasets and streamline your document processing tasks!
