Random Forest

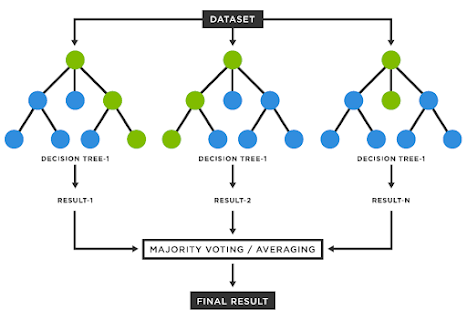

The core idea behind Random Forest is to build a "forest" of decision trees, each trained on a different random sample of the data and choosing its splits from random subsets of the features. Random Forest is a type of bagging (bootstrap aggregating) that combines many decision trees to improve prediction accuracy and robustness. It is used for both classification (like spam detection) and regression (like predicting house prices).

How Does It Work?

- Data Sampling: Randomly draw samples from your data with replacement (bootstrap sampling), one sample per tree.

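- Tree Building: Build a decision tree on each sample. At each split, the tree considers only a random subset of the features, which makes the trees differ from one another.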

- Voting/Averaging: For classification, each tree votes for a class and the most common class wins. For regression, the trees' predictions are averaged.
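The sampling and aggregation steps can be sketched in plain Python. This is a minimal illustration, not a library API: the helper names `bootstrap_sample`, `random_feature_subset`, `majority_vote` and `average` are hypothetical, and the sketch covers only the sampling and combining pieces, not the trees themselves.

```python
import random
from collections import Counter
from statistics import fmean


def bootstrap_sample(rows, rng):
    """Data sampling: draw len(rows) rows uniformly *with replacement*."""
    return [rng.choice(rows) for _ in range(len(rows))]


def random_feature_subset(features, k, rng):
    """Pick k distinct candidate features (a random forest does this at each split)."""
    return rng.sample(features, k)


def majority_vote(class_predictions):
    """Classification: the class predicted by the most trees wins."""
    return Counter(class_predictions).most_common(1)[0][0]


def average(numeric_predictions):
    """Regression: the forest's output is the mean of the trees' outputs."""
    return fmean(numeric_predictions)


rng = random.Random(0)
print(bootstrap_sample(["h1", "h2", "h3", "h4", "h5"], rng))  # rows may repeat; some may be left out
print(random_feature_subset(["size", "location", "age"], 2, rng))
print(majority_vote(["spam", "ham", "spam"]))  # spam
print(average([2.0, 3.0, 4.0]))                # 3.0
```

Because sampling is with replacement, each tree sees a slightly different dataset, which is what lets the combined vote or average be more stable than any single tree.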

Example: Predicting House Prices

Imagine we want to predict house prices based on features like size, location, and age of the house.

Step-by-Step

Collect Data: Gather data on house prices along with features like size, location, and age.

Create Subsets: Randomly draw multiple bootstrap samples (with replacement) from this data.

Build Trees: For each subset, build a decision tree. Each tree might split on different features. For example:

- Tree 1: Splits on size first, then location.

- Tree 2: Splits on age first, then size.

Predict: For a new house, each tree makes a price prediction. These predictions are then averaged.
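To make these steps concrete, here is a small from-scratch sketch of a random forest regressor in pure Python. Everything in it is an illustrative assumption: the toy houses are generated from a made-up pricing rule (not real data), location is encoded as a hypothetical `downtown` flag (1 = Downtown, 0 = Suburbs), and the function names and settings (`n_trees`, `depth`, `max_features`) are invented for this example. Production libraries such as scikit-learn's `RandomForestRegressor` grow deeper trees and are much faster.

```python
import random
from statistics import fmean

# Hypothetical encoding: "downtown" is 1 for Downtown, 0 for Suburbs.
FEATURES = ["size", "downtown", "age"]


def make_toy_data(n=60, seed=1):
    """Synthetic houses priced by a made-up rule plus noise (not real data)."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        size = rng.randint(800, 3000)
        downtown = rng.randint(0, 1)
        age = rng.randint(0, 40)
        price = 150 * size + 100_000 * downtown - 2_000 * age + rng.gauss(0, 20_000)
        rows.append({"size": size, "downtown": downtown, "age": age, "price": price})
    return rows


def sse(prices):
    """Sum of squared errors around the mean (lower means a purer node)."""
    m = fmean(prices)
    return sum((p - m) ** 2 for p in prices)


def build_tree(rows, depth, max_features, rng, min_leaf=2):
    """Grow a regression tree; each split considers only a random feature subset."""
    prices = [r["price"] for r in rows]
    if depth == 0 or len(rows) < 2 * min_leaf:
        return fmean(prices)  # leaf: average price of the rows that reached it
    best = None  # (score, feature, threshold, left_rows, right_rows)
    for feature in rng.sample(FEATURES, max_features):
        for threshold in sorted({r[feature] for r in rows}):
            left = [r for r in rows if r[feature] <= threshold]
            right = [r for r in rows if r[feature] > threshold]
            if len(left) < min_leaf or len(right) < min_leaf:
                continue
            score = sse([r["price"] for r in left]) + sse([r["price"] for r in right])
            if best is None or score < best[0]:
                best = (score, feature, threshold, left, right)
    if best is None:
        return fmean(prices)  # no valid split: make a leaf
    _, feature, threshold, left, right = best
    return (
        feature,
        threshold,
        build_tree(left, depth - 1, max_features, rng, min_leaf),
        build_tree(right, depth - 1, max_features, rng, min_leaf),
    )


def predict_tree(node, house):
    """Follow the splits down to a leaf and return its price."""
    while isinstance(node, tuple):
        feature, threshold, left, right = node
        node = left if house[feature] <= threshold else right
    return node


def fit_forest(rows, n_trees=25, depth=3, max_features=2, seed=0):
    """Bagging: one tree per bootstrap sample (same size, drawn with replacement)."""
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        sample = [rng.choice(rows) for _ in range(len(rows))]
        forest.append(build_tree(sample, depth, max_features, rng))
    return forest


def predict_forest(forest, house):
    """Regression output: average of the individual tree predictions."""
    return fmean(predict_tree(tree, house) for tree in forest)


forest = fit_forest(make_toy_data())
new_house = {"size": 2000, "downtown": 1, "age": 10}
print(f"Predicted price: ${predict_forest(forest, new_house):,.0f}")
```

Each tree sees a different bootstrap sample and, at every split, only `max_features` of the three features, so one tree may split on size first while another starts with age or location, just like Tree 1 and Tree 2 in the walkthrough.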

Example in Numbers

Data:

- House 1: 2000 sq ft, Downtown, 10 years old, price $500,000

- House 2: 1500 sq ft, Suburbs, 5 years old, price $300,000

- And so on...

Random Forest predictions (three trees):

- Tree 1 predicts $450,000

- Tree 2 predicts $470,000

- Tree 3 predicts $440,000

- Average prediction: ($450,000 + $470,000 + $440,000) / 3 ≈ $453,333
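In general, with B trees and writing ŷ_b(x) for tree b's predicted price for house x, the forest's regression output is the plain average of the tree predictions; the three-tree example above is the case B = 3:

```latex
\hat{y}(x) = \frac{1}{B} \sum_{b=1}^{B} \hat{y}_b(x),
\qquad
\hat{y}(x) = \frac{450{,}000 + 470{,}000 + 440{,}000}{3}
           = \frac{1{,}360{,}000}{3} \approx 453{,}333
```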

Advantages

- Accurate: Combining many trees usually gives better predictions than a single tree.

- Robust: Handles large datasets well and is much less prone to overfitting than a single deep tree.

- Variance reduction: Averaging the predictions of many trees, each trained on a random subset of the data and features, reduces variance and smooths out the effect of outliers.
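Why averaging helps can be checked with a quick simulation. A standard result is that if B trees each have prediction variance σ² and every pair of trees has correlation ρ, the variance of their average is ρσ² + (1 − ρ)σ²/B. Adding trees shrinks only the second term, which is why a random forest also randomizes the features: less correlated trees mean a smaller ρ. The sketch below models each tree's error as Gaussian noise with a chosen correlation; this noise model and the `simulate` helper are assumptions for illustration, not real trees.

```python
import math
import random
from statistics import pvariance


def simulate(num_trees, rho, trials=2000, noise_sd=30_000.0, seed=0):
    """Return (variance of one tree's error, variance of the averaged error).

    Hypothetical model: each tree's error = sqrt(rho) * shared + sqrt(1 - rho) * own,
    where `shared` and `own` are independent N(0, noise_sd**2) draws. Every tree then
    has variance noise_sd**2, and any two trees have correlation rho.
    """
    rng = random.Random(seed)
    single, averaged = [], []
    for _ in range(trials):
        shared = rng.gauss(0, noise_sd)  # error component common to all trees
        errors = [
            math.sqrt(rho) * shared + math.sqrt(1 - rho) * rng.gauss(0, noise_sd)
            for _ in range(num_trees)
        ]
        single.append(errors[0])
        averaged.append(sum(errors) / num_trees)
    return pvariance(single), pvariance(averaged)


for rho in (0.0, 0.5):
    var_one, var_avg = simulate(num_trees=50, rho=rho)
    print(f"rho={rho}: averaged/single variance ratio = {var_avg / var_one:.3f}")
```

With independent trees (ρ = 0) the ratio should come out near 1/50 = 0.02; with ρ = 0.5 it should land near 0.5 + 0.5/50 = 0.51, and adding more trees cannot push it below 0.5.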

Disadvantages

- Complex: Harder to interpret than single trees.

- Computationally Intensive: Training and storing many trees requires more memory and computation than a single tree.

Applications

- Finance: Fraud detection, stock price prediction.

- Healthcare: Disease risk prediction, patient diagnosis.

Conclusion

Random Forest is a powerful tool for making accurate predictions by combining multiple decision trees. It's versatile and robust, making it suitable for a wide range of applications.
